I’m working on a game where you throw stacks of your own team-mates in first-person, across various game modes. I tried a few different approaches for player AI, but ended up settling on Utility AI for “what to do” and GOAP (Goal-Oriented Action Planning) for “how to do it”. Both Utility AI and GOAP (see the note below) are worth watching videos on; they’re extremely cool.

Utility AI was popularised by Dave Mark, and was used in Guild Wars 2 (or an expansion). For each AI, each of its actions is scored on how useful it would be to perform at this moment, based on the world state and custom multipliers/curves. The highest-scoring action is then performed. This is a beautiful system. You can cache the world-state values across multiple actions and AIs, and the scoring is just multiplication and addition, so it’s very cheap. You can switch out multiplying against fixed weights for multiplying against a curve, which gives your AI designers much more elegant control. I found this works well for goals, or for non-complex AI, but falls down (for me) when trying to use it for each granular action a player-AI might use. Keeping all the different values (or strata of values) in your head to roughly control what happens becomes difficult. I know that Dave delivers some custom GUIs with his implementations, which may reduce this problem (easy AI design is all about your tooling).
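To make the scoring idea concrete, here is a minimal sketch of curve-based utility scoring. It is not Dave Mark’s implementation or the one in my game; the class names (`Consideration`, `UtilityAction`), the curve functions, and the normalised world values (`distance_to_goal`, `stack_size`) are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Curve = Callable[[float], float]


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def linear(x: float) -> float:
    # Score rises in step with the input.
    return clamp01(x)


def inverse(x: float) -> float:
    # Score falls as the input rises.
    return 1.0 - clamp01(x)


@dataclass
class Consideration:
    input_name: str  # key into the cached, normalised (0..1) world values
    curve: Curve     # shapes how that value affects the score


@dataclass
class UtilityAction:
    name: str
    weight: float
    considerations: List[Consideration]

    def score(self, world: Dict[str, float]) -> float:
        # Start from a fixed weight, then multiply by each curve's response.
        total = self.weight
        for c in self.considerations:
            total *= c.curve(world[c.input_name])
        return total


def pick_action(actions: List[UtilityAction], world: Dict[str, float]) -> UtilityAction:
    # The highest-scoring action wins.
    return max(actions, key=lambda a: a.score(world))


# Hypothetical values, evaluated once and shared by every action this tick.
world = {"distance_to_goal": 0.2, "stack_size": 0.75}

actions = [
    UtilityAction("ThrowAtGoal", 1.0, [
        Consideration("distance_to_goal", inverse),
        Consideration("stack_size", linear),
    ]),
    UtilityAction("PickUpTeammates", 1.0, [
        Consideration("stack_size", inverse),
    ]),
]

best = pick_action(actions, world)
print(best.name)
```

Swapping `linear` for a different curve changes how an action responds to the same input without touching any other code, which is the designer-facing control described above.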

Enter GOAP AI. GOAP was popularised by Jeff Orkin in the 2005 videogame F.E.A.R. Simply:

It’s A* pathfinding, except the destination is your desired world state, other nodes are intermediate world states, and the edges are actions. This is the AI system that feels most right - when you use it, it feels like what you imagined AI design would feel like when you first started thinking about making videogames. Each world state is defined by a bunch of world properties and their values (bools are simplest, but I’d like to see more complex systems like ints with comparisons). E.g. WS.HoldingAtLeast3 = False, WS.OverlappingGoal = False, and WS.Offside = False. Then you define your desired world state (e.g. WS.OverlappingGoal = True), your possible actions (e.g. ThrowAtLeast3TowardsGoal), what each action requires (e.g. WS.HoldingAtLeast3 = True) and what it results in (e.g. WS.OverlappingGoal = True). On top of that you give each action a cost (e.g. default to 1, but PickUpAtLeast3 might have a cost of 3). You don’t need to manually chain these together - the GOAP planner will use the actions to find a path from the current world state to the target world state if it can, and use the length of the action chain and the costs to minimise the total cost (e.g. PickUpAtLeast3 => ThrowAtLeast3TowardsGoal).
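The description above can be sketched as a tiny forward-searching planner. This is an illustration only, not the planner from my game: the `Action` class and `plan` function are hypothetical, the heuristic (number of unmet goal properties) is a simple common choice, and giving PickUpAtLeast3 no preconditions is an assumption made to keep the example small.

```python
import heapq
from dataclasses import dataclass
from itertools import count
from typing import Dict, List, Optional

State = Dict[str, bool]


@dataclass
class Action:
    name: str
    requires: State  # world properties that must hold before the action
    results: State   # world properties the action sets
    cost: float = 1.0


def satisfies(state: State, conditions: State) -> bool:
    return all(state.get(k) == v for k, v in conditions.items())


def unmet(state: State, goal: State) -> int:
    # Heuristic: goal properties not yet satisfied. Note it can overestimate
    # if a single cheap action fixes several goal properties at once.
    return sum(1 for k, v in goal.items() if state.get(k) != v)


def plan(start: State, goal: State, actions: List[Action]) -> Optional[List[str]]:
    tie = count()  # tie-breaker so the heap never has to compare dicts
    open_heap = [(unmet(start, goal), next(tie), 0.0, start, [])]
    best_cost = {frozenset(start.items()): 0.0}
    while open_heap:
        _, _, g, state, path = heapq.heappop(open_heap)
        if satisfies(state, goal):
            return path
        if g > best_cost.get(frozenset(state.items()), float("inf")):
            continue  # stale entry: a cheaper route to this state was found
        for action in actions:
            if not satisfies(state, action.requires):
                continue
            nxt = {**state, **action.results}
            new_g = g + action.cost
            key = frozenset(nxt.items())
            if new_g < best_cost.get(key, float("inf")):
                best_cost[key] = new_g
                heapq.heappush(
                    open_heap,
                    (new_g + unmet(nxt, goal), next(tie), new_g, nxt, path + [action.name]),
                )
    return None  # no chain of actions reaches the goal


start = {"HoldingAtLeast3": False, "OverlappingGoal": False, "Offside": False}
goal = {"OverlappingGoal": True}
actions = [
    Action("PickUpAtLeast3", {}, {"HoldingAtLeast3": True}, cost=3),
    Action("ThrowAtLeast3TowardsGoal", {"HoldingAtLeast3": True}, {"OverlappingGoal": True}),
]

print(plan(start, goal, actions))
```

Nothing in the action list says that picking up must come before throwing; the ordering falls out of the requirements and results alone.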

I implemented the GOAP planner from scratch, based on all the readings and public reference implementations I could find, which greatly assisted my understanding of it. I initially tried using other people’s implementations but none of them fit exactly. Writing it myself, with just what I needed, made debugging a million times easier and let it fit the requirements better. I implemented A* in the process, but it’s not generic - it was easier for me to dedicate it fully to GOAP, instead of implementing an abstract A* machine with A* variable names and then overriding it for GOAP.

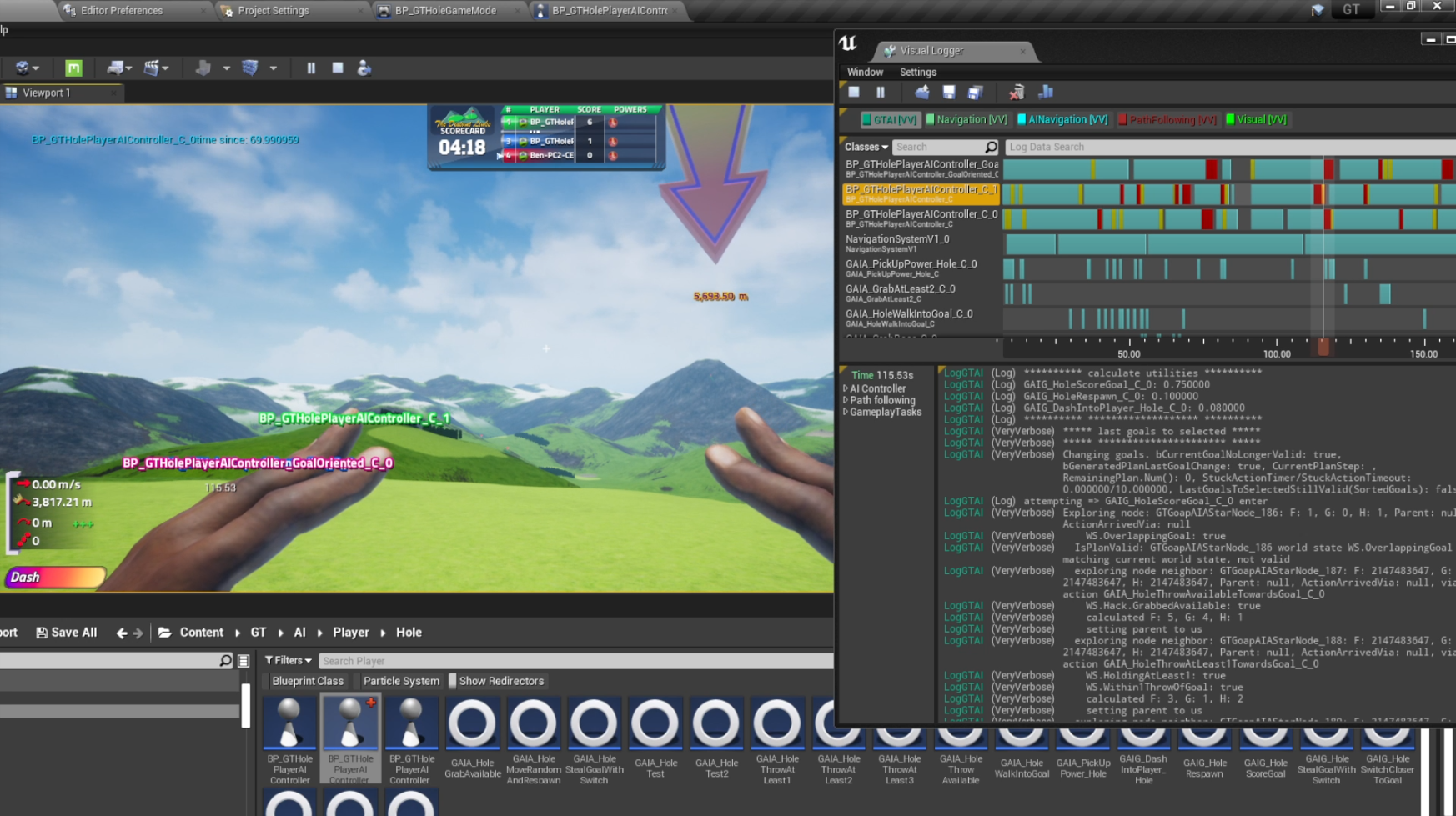

Debug output is the most critical thing to implement when creating game AI systems, and UE4 works well here: it has Visual Logger, which is a time-based log that you can scrub back-and-forth through, only seeing the logs from the tick you have selected. It also differentiates logs per actor, and supports verbosity levels. It’s compiled out of shipping games so it has no final performance impact either. This is where I solve all my “why can’t the planner find a path here” and “why is the planner picking that action” issues.

Other tips: I still had issues going too granular with GOAP actions at the start, so I recommend keeping your actions as coarse as possible, and only going more granular when you need to. It’s still a tool that you use with your AI designer hat on; it doesn’t do everything on its own. But the power of being able to throw in a new goal, maybe one new action, and have the existing actions solve all the other prerequisites, is amazing. Defining world properties and states is a muuuuuuch lower mental load than using utilities for actions.

I wrote it all with performance in mind, and it seems to run fine. Basically lots of caching (each world property is only evaluated once per AI per tick then re-used, shared values are cached for all then re-used, etc); eliminating invalid paths early; and searching backwards from the goal instead of forwards from the current world state. I test the game with 4 AI players on an old i3 laptop processor from ~2016 without issue.
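As a rough illustration of the per-tick caching idea (not my actual code), a world-property cache might look like the sketch below. The `WorldPropertyCache` class, its `get` signature, and the convention of using `None` as the owner for shared values are all assumptions made for this example.

```python
from typing import Callable, Dict, Hashable, Optional, Tuple


class WorldPropertyCache:
    """Evaluates each (owner, property) pair at most once per tick."""

    def __init__(self) -> None:
        self._tick = -1
        self._values: Dict[Tuple[Optional[Hashable], str], bool] = {}
        self.evaluations = 0  # counts real evaluations, for debugging

    def get(
        self,
        tick: int,
        owner: Optional[Hashable],
        prop: str,
        evaluate: Callable[[], bool],
    ) -> bool:
        if tick != self._tick:
            # New tick: everything cached from the last tick is stale.
            self._values.clear()
            self._tick = tick
        key = (owner, prop)
        if key not in self._values:
            self._values[key] = evaluate()
            self.evaluations += 1
        return self._values[key]


cache = WorldPropertyCache()


def is_holding_at_least_3() -> bool:
    # Stand-in for an expensive query against the game world.
    return True


# Same AI, same tick: the second call re-uses the cached value.
cache.get(0, "ai_1", "HoldingAtLeast3", is_holding_at_least_3)
cache.get(0, "ai_1", "HoldingAtLeast3", is_holding_at_least_3)
same_tick_evaluations = cache.evaluations

# Shared values use owner=None, so every AI re-uses one evaluation.
cache.get(0, None, "BallInPlay", lambda: True)
cache.get(0, None, "BallInPlay", lambda: False)
shared_value = cache.get(0, None, "BallInPlay", lambda: False)

# Next tick: the property is evaluated again.
cache.get(1, "ai_1", "HoldingAtLeast3", is_holding_at_least_3)
print(same_tick_evaluations, shared_value, cache.evaluations)
```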

Note: The second talk in this video is wild. The extensions are incredible.

Videogames

Hey, do you like videogames? If so please check out my game Grab n' Throw on Steam, and add it to your wishlist. One gamemode is like golf but on a 256 km^2 landscape, with huge throw power, powerups, and a moving hole. Another is like dodgeball crossed with soccer except instead of balls you throw stacks of your own teammates. And there's plenty more! See full gameplay on Steam!