- 1 - Intro

- 2 - Deploying the rest

Series Overview

Say you’re an organisation who’s started their cloud journey, but hasn’t fully dove (doven? dove’st?) in. You’ve got some solid business reasons that mean you now need to lean into it. You know you want to do it properly - you want to move to Infrastructure as Code and set up a modern architecture that will grow with you. What do you do?

One answer is to read through Microsoft’s documentation - like their Cloud Adoption Framework, Well-Architected Framework and Architecture library - then implement their recommended designs. The struggle is that there are a lot. Azure caters to orgs of all sizes, and these docs are often starting points or Best Practices - i.e. things you do unless you have a more specific reason to do something else.

The good news is that you can pick a few key starting points from those docs, then only scale up when you need to.

This series will walk through the foundation of one way of deploying and maintaining a modern Azure design using Infrastructure as Code. There are lots of different ways to do this because there are so many differing organisation structures and requirements. This one isn’t perfect - Bicep has some limitations at the moment. Issues will be covered along the way.

The implementation we’ll be working through is one of many options. See the Cloud Adoption Framework for some of the others.

Conway’s Law

Conway’s Law applies here. Basically - every organisation implements systems that match their org chart. Lines of communication end up creating system boundaries.

When implementing an Azure architecture - regardless of whether you’re configuring it all as code or through the Portal - design it to match your organisational structure. It’ll make things much easier later. If you’re part of a larger effort to modernise the organisation, ensure people are actually working on changing the rest of the org and not just expecting ~the cloud~ to modernise things for them.

Also design it to match your organisational size and objectives. One company’s best practice is another company’s bankruptcy. Focus on delivering value, not impressing other engineers.

Microsoft Resources

Throughout this guide we’ll reference and rely on the Microsoft resources mentioned above. It’s extremely beneficial and time-saving to learn from those that have come before you.

But also keep in mind that Microsoft doesn’t do anything out of the goodness of their own heart. All their documentation is created with the end goal of getting you to give them more money. When you read their guides you’re taking advice from the group who stands to benefit most from you deploying more cloud infrastructure! Make sure everything you implement fits your company’s requirements. Adjust when required.

Best Practices (or Not)

For the sake of time and convenience I won’t always use best practices. I’ll try to call it out when I don’t. Things like: using the latest version of a GitHub Action, instead of pinning to a specific version and reviewing changes.

Other concessions will be made for the sake of easier examples, but the fundamentals will be there. In production you’ll have your own constraints.

The Company

Okay, let’s come up with an example company we’ll use throughout the series:

Golden Syrup Manufacturing (GSM) is big enough to have an internal IT department and a few groups within that, but they’re not an F500. There’s a network group, a central IT group (maybe the network group is part of this), and developers. GSM isn’t entirely a software company - they make physical things. But in-house software, hosted, is definitely part of their offering.

Other parts of the business have run the analysis and decided that cloud is what they want. Even though an on-prem or hybrid model is usually cheaper for static, known-quantity workloads, they’ve run The Numbers and we don’t need to worry about this.

Technologies

We’ll mainly use Bicep for IaC, and GitHub for source control / pipelines. Bicep isn’t perfect yet but has the nice property of not requiring backend storage. We might take a detour into Azure DevOps later.

Structure

We’re going to set up a modified, barebones version of the Azure Enterprise-Scale architecture, or Azure Landing Zone Conceptual Architecture, using landing zones. There are variants of this, but in our case we’ll have different management groups or subscriptions for shared non-networking things, shared networking things, and then for each workload. This will align roughly with our org boundaries: central IT, networking, app teams. We’re part of a newly formed platform team that has the responsibility of setting up the Azure estate and safely handing control of services to the teams above.

What is a Landing Zone? It’s a template. The docs take a seriously long-winded route to explaining that. It’s just a template for Azure infrastructure that works well for orgs of a certain type and scale.

What’s the difference between Azure Enterprise Scale and Azure Landing Zones? They’re two terms for the same thing. Azure Enterprise Scale is the old name.

Azure Landing Zone Conceptual Architecture

Additionally, the app-team model can be expanded to other areas of the business. If finance want to spin up a PoC we can create them another subscription/area to be costed out separately.

We’ll use the Azure Landing Zone Bicep Modules.

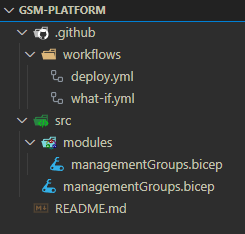

Set up the repo

We’ll start with housing the IaC for the platform we’re deploying in a single gsm-platform repo. The contents of apps in separate app landing zones will be in other repos. There are different models for this: You could have separate repos for different areas of the infrastructure - e.g. one for all the networking.

Starting with all the platform IaC code in one repo keeps things simpler, and we can migrate to multiple later if required. If you’re doing a skunkworks-kinda thing, or you’re the first cloud enablement team, you might want to start with just one.

Either way this will be a high-privileged area. Once we set up pipelines to deploy changes, changes to the repo will affect the Azure environment. In production you’d want to set up strong access control and approval to this repository.

You can follow along with the code in the repo. The below is written chronologically but you’ll be looking at the completed version of the code. It should still be understandable. If you want to follow the state of the repo at the time of writing, you can use this commit.

I’ve enabled GitHub Environments and created one called prod. This will simplify some of the federated auth below.

Copy management template and configure

I’ve copied the managementGroups.bicep file from the ALZ-Bicep repo into our new repo and set up a simpler directory structure:

- src holds Bicep IaC code

- src/modules holds the Bicep modules, mainly copied from the ALZ repo

- Bicep files in the root of src wrap the src/modules modules, and specify parameters. This keeps all the nice type checking in Bicep instead of mixing ARM-based JSON and Bicep

I’ve modified the template to remove the telemetry tracking as well.

Test locally

To speed up iteration and testing, let’s test this locally before codifying in a pipeline.

Permissions

To do this you’ll need to:

- elevate your privileges, even if your account set up the tenant/directory

- assign yourself the Owner IAM role at the root / Azure scope, which is different to the root management group

- then sign out of and back into your PowerShell module: Disconnect-AzAccount and Connect-AzAccount
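The steps above can be sketched in PowerShell roughly as follows. This is a minimal sketch, not the only way to do it: it assumes the Az.Accounts and Az.Resources modules are installed, that you’re signed in as a Global Administrator, and that the REST call in step 1 is an acceptable stand-in for the portal toggle. You may need to sign out and back in between steps 1 and 2 for the elevated access to take effect.

```powershell
# Sketch only: assumes Az.Accounts and Az.Resources are installed and that
# you're already signed in with Connect-AzAccount as a Global Administrator.

# 1. Elevate access. Assumption: this REST call is used instead of the
#    "Access management for Azure resources" toggle in the portal.
Invoke-AzRestMethod -Method POST -Path '/providers/Microsoft.Authorization/elevateAccess?api-version=2016-07-01'

# 2. Assign Owner at the root "/" scope (not the root management group).
#    Assumption: the signed-in account ID is its user principal name,
#    which may not hold for guest accounts.
$user = Get-AzADUser -UserPrincipalName (Get-AzContext).Account.Id
New-AzRoleAssignment -Scope '/' -RoleDefinitionName 'Owner' -ObjectId $user.Id

# 3. Sign out and back in so the new role is picked up.
Disconnect-AzAccount
Connect-AzAccount
```

Once you’ve finished testing, consider removing the root-scope Owner assignment and the elevated access again, since both are very broad.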

Testing

- Clone down the above repo

- Adjust the settings in src/managementGroups.bicep as required

- Edit and run:

    $inputObject = @{
        # Timestamped name so each run is distinct in the deployment history.
        # Note 'mm' (minutes) in the time portion; 'MM' would repeat the month.
        DeploymentName = 'alz-MGDeployment-{0}' -f (-join (Get-Date -Format 'yyyyMMddTHHmmssffffZ')[0..63])
        Location       = 'Australia East'
        TemplateFile   = 'src/managementGroups.bicep'
    }

    # view changes
    New-AzTenantDeployment @inputObject -WhatIf

    # apply
    New-AzTenantDeployment @inputObject
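To confirm the hierarchy landed as expected, you could list the management groups afterwards. A minimal sketch, assuming you’re still signed in with the Az module from the steps above:

```powershell
# Sketch only: lists every management group the signed-in account can see,
# so you can compare the names against src/managementGroups.bicep.
Get-AzManagementGroup | Select-Object Name, DisplayName | Sort-Object Name
```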

A note on deletions

Bicep doesn’t fully support terraform destroy functionality yet. There’s a Complete mode that works with some commands that will destroy resources if they’re not in a definition (so you can pass a blank one) but it doesn’t work at the tenant level.

Deployment Stacks is meant to fix this but, at the time of writing, it’s not in public preview yet.

To delete this test deployment you could delete the top-level group you deployed.

Codify in a pipeline

Now that we know the deployment works we can set up the repo to deploy changes automatically, with controls.

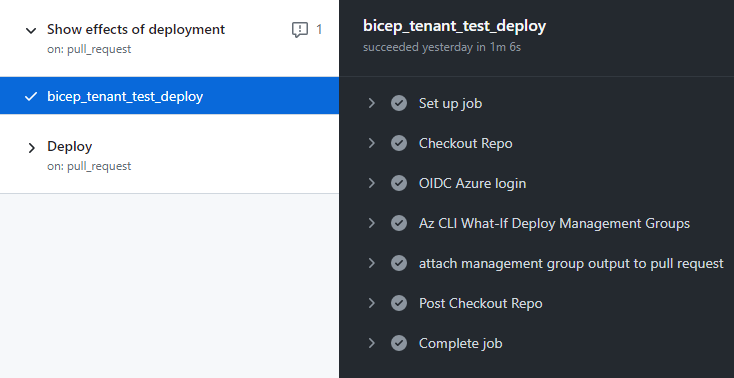

Test with what-if

I’ve copied the sample file from https://github.com/Azure/ALZ-Bicep/wiki/PipelinesGitHub into .github/workflows/what-if.yml to start with, and modified it:

- Env variables are removed from here. I’m defaulting to keeping them in the Bicep definitions because they’re the environment spec. The outer Bicep file is already used to define the variables and is already separate from the module code. The only things we’ll extract to variables here later are things that will need to change per job or per run. E.g. if you had a test environment you deployed to as well, you’d set up variables for things you need to change to get the deployment to run in that environment instead.

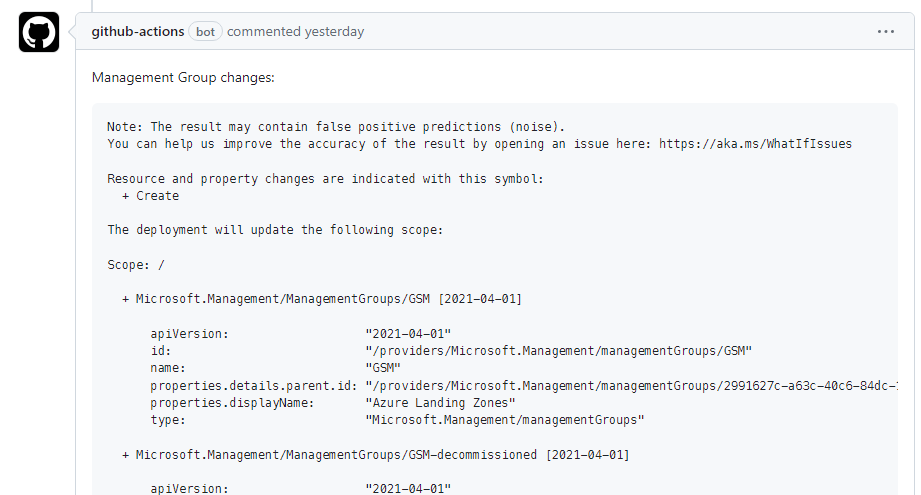

- Modified to show the effects, not deploy yet. The what-if version runs the what-if subcommand to generate the output, then attaches it to the pull request.

- Uses OIDC auth flow, not saved service principal creds. It uses the newer GitHub Actions auth method to reduce the number of creds we’re storing, and to reduce the scope of a compromise.

- Uses environments. Makes auth simpler.

Warning: my repo is public for demonstration, but I’ve enabled Require approval for all outside collaborators to prevent everyone from being able to trigger workflows with pull requests. In your own environment you’ll want to restrict access to the repo in some way.

Run through this to set up the required Azure AD account.

Scope the app permissions to the root / level as above but use the Contributor role.

Adjust the subject to match your repo. In this case it’s repo:GSGBen/gsm-platform:environment:prod.

    $AppName = 'GSM Platform GitHub Actions'

    # create the app registration and its service principal
    New-AzADApplication -DisplayName $AppName
    $clientId = (Get-AzADApplication -DisplayName $AppName).AppId
    New-AzADServicePrincipal -ApplicationId $clientId

    # grant the service principal Contributor at the root / scope
    $objectId = (Get-AzADServicePrincipal -DisplayName $AppName).Id
    New-AzRoleAssignment -ObjectId $objectId -RoleDefinitionName Contributor -Scope "/"

    # output the client, subscription and tenant IDs
    (Get-AzADApplication -DisplayName $AppName).AppId
    (Get-AzContext).Subscription.Id
    (Get-AzContext).Subscription.TenantId

    # add the federated credential for this repo and environment
    $appObjectId = (Get-AzADApplication -DisplayName $AppName).Id
    # important: no trailing slash here even though it's in the docs, because GitHub presents it without it.
    New-AzADAppFederatedCredential -ApplicationObjectId $appObjectId -Audience api://AzureADTokenExchange -Issuer 'https://token.actions.githubusercontent.com' -Name 'GSM-Platform-GitHub-Actions-Federation' -Subject 'repo:GSGBen/gsm-platform:environment:prod'

If you get the error AADSTS70021: No matching federated identity record found for presented assertion, ensure that all details (shown in the output of the GitHub Action log) match, including trailing slashes.
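When chasing a subject mismatch, it can help to see the formats GitHub uses for the subject claim. The helper below is a hypothetical illustration (Get-GitHubOidcSubject is not part of any module) that builds the subject string for the three common cases. It also shows why using an environment keeps things simple: a job that references an environment gets the environment form of the subject regardless of what triggered it, so one federated credential covers both the pull request and the merge.

```powershell
# Hypothetical helper for illustration only; not part of the Az module or GitHub tooling.
function Get-GitHubOidcSubject {
    param(
        [Parameter(Mandatory)] [string] $Repository,  # in 'owner/repo' form
        [string] $Environment,                        # job uses a GitHub Environment
        [string] $Branch,                             # job runs on a branch, no environment
        [switch] $PullRequest                         # job runs on a pull request, no environment
    )

    # ${Repository} is braced so the following ':' isn't parsed as a scope qualifier.
    if ($Environment) { return "repo:${Repository}:environment:$Environment" }
    if ($PullRequest) { return "repo:${Repository}:pull_request" }
    if ($Branch)      { return "repo:${Repository}:ref:refs/heads/$Branch" }

    throw 'Specify -Environment, -PullRequest or -Branch.'
}

# The subject used for the federated credential in this article:
Get-GitHubOidcSubject -Repository 'GSGBen/gsm-platform' -Environment 'prod'
# repo:GSGBen/gsm-platform:environment:prod
```

Without an environment you’d need separate federated credentials for the pull_request and ref:refs/heads/main subjects.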

Once set up, you can test the workflow by manually triggering it under the Actions tab in the repo, or opening a pull request. If you open a pull request you should see the expected results of the deployment get added to the pull request as a comment.

Note that the management groups require a tenant deployment, which requires az-cli or powershell directly, which requires some extra mess-arounds in the script to maintain the expected output formatting in the workflow output and the comment.

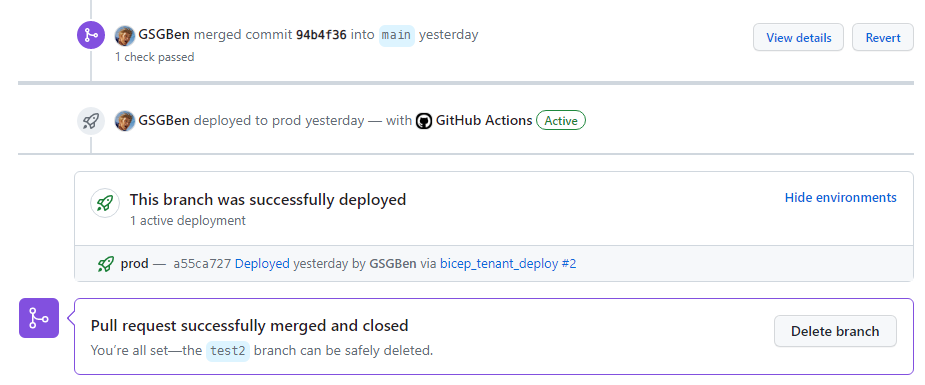

Deploy

Now that we have the what-if working, the deployment is trivial because it uses the same permissions and a simpler workflow. I’ve copied .github/workflows/what-if.yml to .github/workflows/deployment.yml and updated it:

- Changed what-if to create in the cli call. This deploys the IaC.

- Only run when manually requested or when a pull request is merged. The what-if runs every time a PR is changed, the deployment runs when it’s merged into main. Note that the merged status is checked under each job in an if block, not in the high-level on block. If you add more jobs here you’ll need to duplicate the if check.

- Removed the PR-comment-posting. GitHub will automatically show a deployment in progress message with link to the result and whether it failed or not.

Note that this isn’t perfect yet: you’d probably want a rollback strategy, or a don’t-merge-until-successful process. I’m leaving it as-is for now to save time. We might revisit it later.

Also note, this doesn’t handle renames or deletes. For Bicep support you’ll need to wait for Deployment Stacks. In the meantime you could check the output (I recommend PowerShell instead of az-cli, for easier object-based processing) and rename/remove them manually. Otherwise you could use Terraform, but I’m not sure if it uses unchanging IDs per management group or also uses the named-and-visible Azure ones like Bicep.

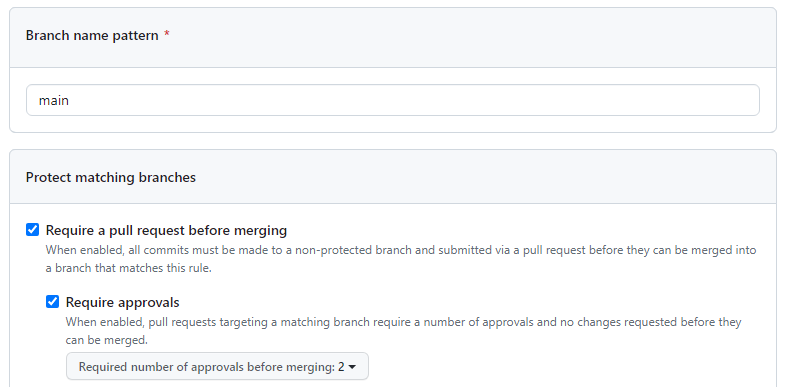

To add controls, you can either use Branch Protection Rules, Environment Protection Rules, or a mix of both. A common option is requiring approvers.

One reason for splitting IaC into multiple Environments or reviewers would be to reduce the controls for less critical infrastructure areas, allowing more efficient deployments where there’s less risk.

In the next article we’ll extend the deployment to include the rest of the platform landing zone resources.
